Introduction

I have been experimenting with Deep Learning models in PyTorch for a couple of weeks now. PyTorch is an open-source Python package that provides Tensor computation (similar to NumPy) with GPU support. The dataset used for this particular blog post does not do justice to the real-life usage of PyTorch for image classification. However, it gives a general idea of how Transfer Learning can be used for more complicated image classification. Transfer learning, in a nutshell, is reusing a model developed for some other classification task for your own classification purposes. The dataset was created by scraping images from Google Image Search.

Creating the dataset

For our dataset, we need images of birds, planes, and Superman. We will be using the icrawler package to download the images from Google Image Search, starting with planes.
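
Here is a minimal sketch of the download step, assuming icrawler's built-in `GoogleImageCrawler`. The helper name `download_images`, the folder layout, and the image count are my own illustrative choices, not values from the original run.

```python
def download_images(keyword, root_dir, max_num=100):
    """Download up to max_num Google Image Search results for keyword into root_dir."""
    # Imported here so the helper can be defined even where icrawler is missing.
    from icrawler.builtin import GoogleImageCrawler

    # root_dir is created by icrawler if it does not exist yet.
    crawler = GoogleImageCrawler(storage={"root_dir": root_dir})
    crawler.crawl(keyword=keyword, max_num=max_num)
```

Calling `download_images("planes", "images/plane")` would fill a hypothetical `images/plane` folder. Search results are noisy, so it is worth skimming the folder and deleting obviously wrong images.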

We repeat the same for birds and Superman. Once all the files have been downloaded, we restructure the folders into training, validation, and test sets. I am allocating 70% of the images for training, 20% for validation, and 10% for testing.
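
The 70/20/10 split can be sketched with the standard library alone. This assumes one sub-folder per class under a source directory; the function name `split_dataset` and the `train`/`valid`/`test` folder names are hypothetical.

```python
import random
import shutil
from pathlib import Path


def split_dataset(src_dir, dst_dir, percents=(70, 20, 10), seed=0):
    """Copy src_dir/<class>/* into dst_dir/{train,valid,test}/<class>/."""
    src_dir, dst_dir = Path(src_dir), Path(dst_dir)
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    counts = {}
    for class_dir in sorted(p for p in src_dir.iterdir() if p.is_dir()):
        files = sorted(p for p in class_dir.iterdir() if p.is_file())
        rng.shuffle(files)
        # Integer arithmetic avoids floating-point rounding surprises.
        n_train = len(files) * percents[0] // 100
        n_valid = len(files) * percents[1] // 100
        splits = {
            "train": files[:n_train],
            "valid": files[n_train:n_train + n_valid],
            "test": files[n_train + n_valid:],  # whatever is left over
        }
        for split_name, members in splits.items():
            target = dst_dir / split_name / class_dir.name
            target.mkdir(parents=True, exist_ok=True)
            for image_path in members:
                shutil.copy2(image_path, target / image_path.name)
        counts[class_dir.name] = {k: len(v) for k, v in splits.items()}
    return counts
```

The files are copied rather than moved, so the raw downloads stay intact if the split needs to be redone.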

Loading the data

PyTorch reads data through generator-like loaders that yield one batch at a time. Since datasets are usually large, it makes sense not to load everything into memory at once. Let’s import the libraries that we will be using for classification.

Now that we have imported the libraries, we need to augment and normalize the images. Torchvision transforms are used to augment the training data with random scaling, rotations, mirroring, and cropping. We do not need to rotate or flip our testing and validation sets. The data for each set is then loaded with Torchvision’s ImageFolder and PyTorch’s DataLoader.

Let us visualize a few training images to understand the data augmentation.
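
A small plotting helper is enough for this. The sketch assumes the images were normalized with the ImageNet mean and standard deviation, so it undoes that step before display; `to_displayable` and `show_batch` are hypothetical names.

```python
import numpy as np
import matplotlib.pyplot as plt

MEAN = np.array([0.485, 0.456, 0.406])
STD = np.array([0.229, 0.224, 0.225])


def to_displayable(image_tensor):
    """Convert a normalized CxHxW tensor to an HxWxC array with values in [0, 1]."""
    image = image_tensor.detach().cpu().numpy().transpose((1, 2, 0))
    image = image * STD + MEAN  # undo the normalization
    return np.clip(image, 0.0, 1.0)


def show_batch(images, labels, class_names, n=4):
    """Plot the first n images of a batch with their class names as titles."""
    n = min(n, len(images))
    fig, axes = plt.subplots(1, n, figsize=(3 * n, 3))
    for ax, image, label in zip(np.atleast_1d(axes), images[:n], labels[:n]):
        ax.imshow(to_displayable(image))
        ax.set_title(class_names[int(label)])
        ax.axis("off")
    return fig
```

With a training loader in hand, `images, labels = next(iter(train_loader))` followed by `show_batch(images, labels, class_names)` displays a few augmented samples.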

Loading a pre-trained model

We will be using DenseNet for our purposes.

The pre-trained model’s classifier takes 1920 features as input, so our replacement classifier must accept the same input size. Its output size, however, is 3 in our case, one for each class (bird, plane, and Superman).

Now, let’s create our classifier and replace the model’s classifier.

We are using the ReLU activation function, followed by dropout with a probability of 20%, in the hidden layers. For the output layer, we are using LogSoftmax.
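
A classifier along these lines can be sketched as follows. The hidden layer sizes (1024 and 500) are assumptions, since only the 1920 inputs, the 3 outputs, the dropout rate, and the activations are given.

```python
from collections import OrderedDict

import torch
from torch import nn


def build_classifier(in_features=1920, hidden_units=(1024, 500), n_classes=3, drop_p=0.2):
    """Fully connected head: (Linear -> ReLU -> Dropout) per hidden layer, then LogSoftmax."""
    layers = []
    sizes = [in_features, *hidden_units]
    for i, (n_in, n_out) in enumerate(zip(sizes[:-1], sizes[1:]), start=1):
        layers.append((f"fc{i}", nn.Linear(n_in, n_out)))
        layers.append((f"relu{i}", nn.ReLU()))
        layers.append((f"drop{i}", nn.Dropout(p=drop_p)))
    layers.append(("output", nn.Linear(sizes[-1], n_classes)))
    # LogSoftmax over the class dimension yields log-probabilities.
    layers.append(("log_softmax", nn.LogSoftmax(dim=1)))
    return nn.Sequential(OrderedDict(layers))
```

Replacing the head is then a single assignment: `model.classifier = build_classifier()`.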

Training Criterion, Optimizer, and Decay
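
Because the classifier ends in LogSoftmax, negative log-likelihood loss is the natural criterion. The optimizer (Adam), the learning rate, and the step-wise learning rate decay in this sketch are assumed choices, and `make_training_tools` is a hypothetical helper.

```python
import torch
from torch import nn, optim


def make_training_tools(classifier, lr=0.001, step_size=4, gamma=0.1):
    """Return (criterion, optimizer, scheduler) for training the classifier head."""
    # NLLLoss expects log-probabilities, which LogSoftmax provides.
    criterion = nn.NLLLoss()
    # Only the classifier's parameters are optimized.
    optimizer = optim.Adam(classifier.parameters(), lr=lr)
    # Multiply the learning rate by gamma every step_size epochs.
    scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=step_size, gamma=gamma)
    return criterion, optimizer, scheduler
```

Passing `model.classifier` rather than the whole model keeps the optimizer away from any frozen pre-trained weights.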

Model Training and Testing

Let us calculate the accuracy of the model without training it first.

The accuracy is pretty low at this point, which is expected. The cuda parameter is a boolean that indicates whether GPU hardware is available on the machine.
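
An accuracy check of this kind can be sketched as below. The function name `check_accuracy` is hypothetical; the loader is assumed to yield `(images, labels)` batches.

```python
import torch


def check_accuracy(model, loader, cuda=False):
    """Return the fraction of samples in loader that the model classifies correctly."""
    device = torch.device("cuda" if cuda else "cpu")
    model.to(device)
    model.eval()  # disable dropout for evaluation
    correct = 0
    total = 0
    with torch.no_grad():  # no gradients needed when only measuring accuracy
        for images, labels in loader:
            images, labels = images.to(device), labels.to(device)
            predictions = model(images).argmax(dim=1)
            correct += (predictions == labels).sum().item()
            total += labels.size(0)
    return correct / total if total else 0.0
```

With three balanced classes, an untrained head should land somewhere near chance level, roughly one in three.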

Let us train the model.
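
A bare-bones training loop, as a sketch: the signature, the default number of epochs, and the returned history are my own choices, and the loaders are assumed to yield `(images, labels)` batches.

```python
import torch


def train(model, train_loader, valid_loader, criterion, optimizer,
          scheduler=None, epochs=5, cuda=False):
    """Train the model and return per-epoch training loss and validation accuracy."""
    device = torch.device("cuda" if cuda else "cpu")
    model.to(device)
    history = []
    for epoch in range(epochs):
        model.train()  # enable dropout
        running_loss, seen = 0.0, 0
        for images, labels in train_loader:
            images, labels = images.to(device), labels.to(device)
            optimizer.zero_grad()
            loss = criterion(model(images), labels)
            loss.backward()
            optimizer.step()
            running_loss += loss.item() * labels.size(0)
            seen += labels.size(0)
        if scheduler is not None:
            scheduler.step()  # apply learning rate decay once per epoch

        model.eval()  # disable dropout for validation
        correct, total = 0, 0
        with torch.no_grad():
            for images, labels in valid_loader:
                images, labels = images.to(device), labels.to(device)
                correct += (model(images).argmax(dim=1) == labels).sum().item()
                total += labels.size(0)
        history.append({
            "epoch": epoch + 1,
            "train_loss": running_loss / max(seen, 1),
            "valid_acc": correct / max(total, 1),
        })
    return history
```

Printing the last entry of the returned history after each run is a quick way to watch the validation accuracy climb.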

Since a GPU was available, the training took around 10 minutes. The validation accuracy is almost 99%. Let us check the accuracy over the training data again.

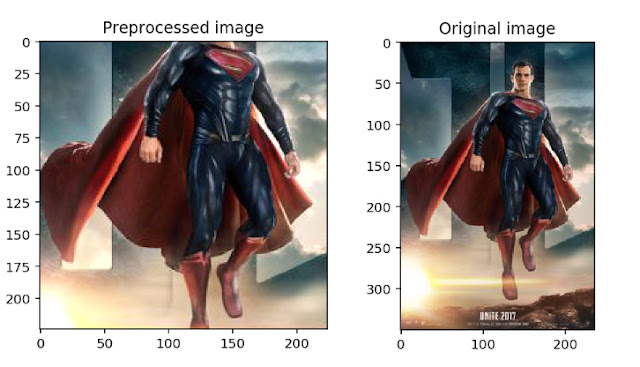

Image Preprocessing

We declare a few functions to preprocess images and pass them to the trained model.

Predicting by passing an image

Since our model is ready and we have built functions that allow us to visualize the results, let us try it out on one of the sample images.
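
A prediction helper could look like this. It assumes the model outputs log-probabilities (as with a LogSoftmax output layer) and that `class_names` is listed in the same order as the dataset's class indices; the name `predict` and its signature are hypothetical.

```python
import torch


def predict(image_tensor, model, class_names, topk=3, cuda=False):
    """Return the topk (class name, probability) pairs for one preprocessed image."""
    device = torch.device("cuda" if cuda else "cpu")
    model.to(device)
    model.eval()
    with torch.no_grad():
        batch = image_tensor.unsqueeze(0).to(device)  # add a batch dimension
        probabilities = torch.exp(model(batch))  # log-probabilities -> probabilities
    k = min(topk, len(class_names))
    top_probs, top_indices = probabilities.topk(k, dim=1)
    return [
        (class_names[index], prob)
        for index, prob in zip(top_indices[0].tolist(), top_probs[0].tolist())
    ]
```

The result is sorted from most to least likely, so the first pair is the model's answer for the image.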

So, that is it.