[Paper Exploration] SMOTE: Synthetic Minority Over-sampling Technique

Authors: Nitesh V. Chawla, Kevin W. Bowyer, Lawrence O. Hall, W. Philip Kegelmeyer

Published on arXiv on 9 Jun 2011 (originally published in the Journal of Artificial Intelligence Research, 2002)

Abstract

An approach to the construction of classifiers from imbalanced datasets is described. A dataset is imbalanced if the classification categories are not approximately equally represented. Often real-world data sets are predominately composed of “normal” examples with only a small percentage of “abnormal” or “interesting” examples. It is also the case that the cost of misclassifying an abnormal (interesting) example as a normal example is often much higher than the cost of the reverse error. Under-sampling of the majority (normal) class has been proposed as a good means of increasing the sensitivity of a classifier to the minority class. This paper shows that a combination of our method of over-sampling the minority (abnormal) class and under-sampling the majority (normal) class can achieve better classifier performance (in ROC space) than only under-sampling the majority class. This paper also shows that a combination of our method of over-sampling the minority class and under-sampling the majority class can achieve better classifier performance (in ROC space) than varying the loss ratios in Ripper or class priors in Naive Bayes. Our method of over-sampling the minority class involves creating synthetic minority class examples. Experiments are performed using C4.5, Ripper and a Naive Bayes classifier. The method is evaluated using the area under the Receiver Operating Characteristic curve (AUC) and the ROC convex hull strategy.

Terminologies

Imbalanced dataset

An imbalanced dataset refers to a situation where the number of examples in different classes is not evenly distributed, meaning one class has significantly fewer instances than the others.
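A quick way to quantify imbalance is the ratio between the largest and smallest class counts. The sketch below is illustrative only: `imbalance_ratio` is a hypothetical helper, and the 95/5 labels are made-up toy data rather than a dataset from the paper.

```python
from collections import Counter

def imbalance_ratio(labels):
    """Return (largest class count) / (smallest class count) for a list of labels."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())

# Hypothetical toy labels: 95 "normal" (0) examples and 5 "interesting" (1) examples.
labels = [0] * 95 + [1] * 5
print(Counter(labels))          # Counter({0: 95, 1: 5})
print(imbalance_ratio(labels))  # 19.0
```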

Undersampling

Undersampling is a technique used to address this imbalance by reducing the size of the over-represented class, typically by randomly removing instances from that class.
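Random undersampling can be sketched in a few lines. This is a minimal illustration under stated assumptions: `random_undersample` and its `n_keep` parameter are hypothetical names, examples are plain Python lists, and majority examples are dropped uniformly at random without replacement.

```python
import random

def random_undersample(X, y, majority_label, n_keep, seed=0):
    """Keep every non-majority example plus a random subset of n_keep majority examples."""
    rng = random.Random(seed)
    majority = [i for i, label in enumerate(y) if label == majority_label]
    others = [i for i, label in enumerate(y) if label != majority_label]
    kept = rng.sample(majority, n_keep) + others  # sampling without replacement
    kept.sort()  # preserve the original ordering of the surviving examples
    return [X[i] for i in kept], [y[i] for i in kept]

# Hypothetical toy data: one feature per example, 95 majority (0) and 5 minority (1).
X = [[float(i)] for i in range(100)]
y = [0] * 95 + [1] * 5
X_u, y_u = random_undersample(X, y, majority_label=0, n_keep=10)
print(y_u.count(0), y_u.count(1))  # 10 5
```

Undersampling discards information from the majority class, which is one reason the paper pairs it with over-sampling of the minority class instead of relying on it alone.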

Oversampling

Oversampling increases the number of instances in the under-represented class, either by duplicating existing examples or by generating new, synthetic ones.
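The simplest form of oversampling, duplication (random over-sampling with replacement), is the baseline that SMOTE is meant to improve on. A minimal sketch, assuming list-based data; `random_oversample` and `n_new` are hypothetical names chosen for illustration.

```python
import random

def random_oversample(X, y, minority_label, n_new, seed=0):
    """Append n_new copies of randomly chosen minority examples (sampling with replacement)."""
    rng = random.Random(seed)
    minority = [i for i, label in enumerate(y) if label == minority_label]
    picks = [rng.choice(minority) for _ in range(n_new)]
    X_out = X + [list(X[i]) for i in picks]  # copy each row so duplicates are independent lists
    y_out = y + [minority_label] * n_new
    return X_out, y_out

# Hypothetical toy data: 95 majority (0) and 5 minority (1) examples.
X = [[float(i)] for i in range(100)]
y = [0] * 95 + [1] * 5
X_o, y_o = random_oversample(X, y, minority_label=1, n_new=90)
print(y_o.count(0), y_o.count(1))  # 95 95
```

Every added row is an exact copy of an existing minority example, so no new regions of feature space are covered; the paper's motivation for SMOTE is that such replication tends to make the classifier's minority decision regions smaller and more specific.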

Receiver Operating Characteristic (ROC)

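A standard technique for summarizing classifier performance over a range of tradeoffs between the true positive rate and the false positive rate: the ROC curve plots the true positive rate against the false positive rate as the classifier's decision threshold varies. The Area Under the Curve (AUC) is the traditional single-number performance metric for an ROC curve.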
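To make the definition concrete, the sketch below sweeps the decision threshold over every distinct score, records one (FPR, TPR) point per threshold, and integrates the resulting curve with the trapezoidal rule. The function names and the toy scores are hypothetical; it assumes binary labels (1 = positive/minority) and at least one example of each class. It does not implement the ROC convex hull strategy that the paper also uses.

```python
def roc_points(scores, labels):
    """(FPR, TPR) points from sweeping the threshold over every distinct score, high to low."""
    pos = sum(labels)
    neg = len(labels) - pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for t in sorted(set(scores), reverse=True):
        # predict "positive" whenever score >= t
        tp = sum(1 for s, l in zip(scores, labels) if s >= t and l == 1)
        fp = sum(1 for s, l in zip(scores, labels) if s >= t and l == 0)
        points.append((fp / neg, tp / pos))
    return points

def auc(points):
    """Area under a piecewise-linear ROC curve via the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))

# Hypothetical classifier scores and true labels.
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4]
labels = [1, 1, 0, 1, 0, 0]
print(round(auc(roc_points(scores, labels)), 4))  # 0.8889
```

An AUC of 1.0 means every positive is scored above every negative, while random scoring gives about 0.5; here 8 of the 9 positive/negative pairs are ranked correctly, hence 8/9.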

Main points in the paper

- Imbalanced datasets are a common challenge when training machine learning models.

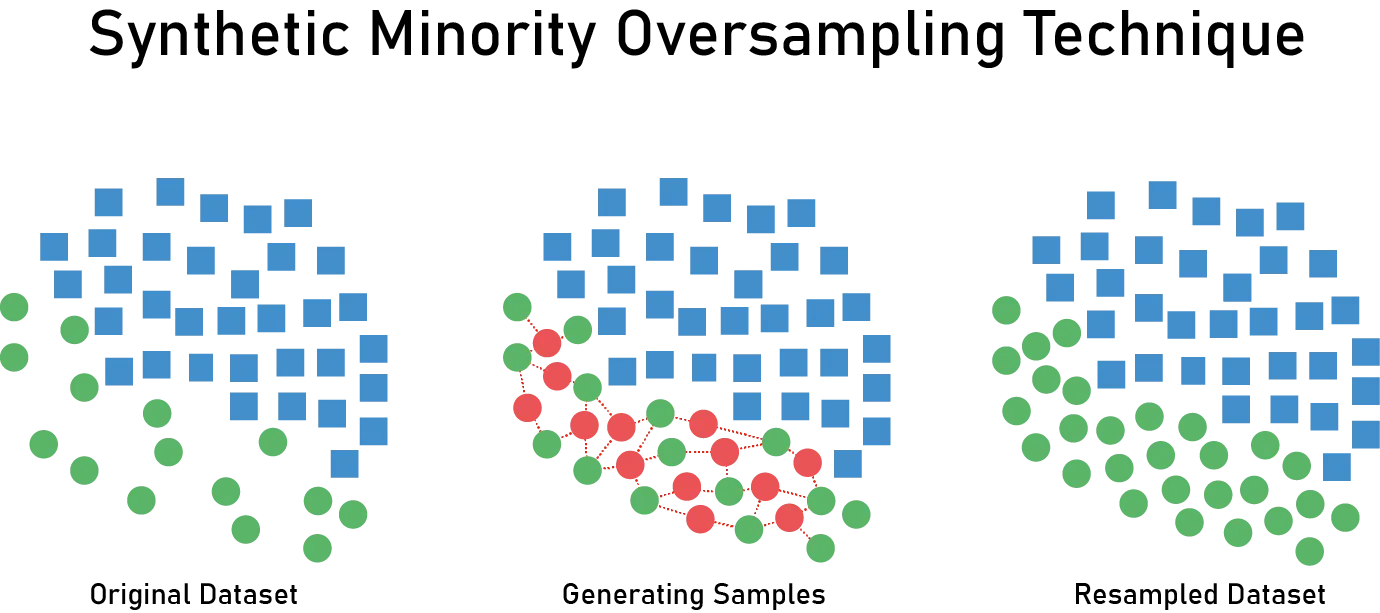

- SMOTE (Synthetic Minority Over-sampling Technique) is introduced to tackle class imbalance.

- It generates synthetic instances of the minority class instead of duplicating existing ones.

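- Each synthetic instance is created by interpolation: take a minority sample and one of its k nearest minority-class neighbors (k = 5 in the paper), compute the difference between their feature vectors, multiply it by a random number between 0 and 1, and add the result to the original sample.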

- Primary objective: improve classifier performance on the minority class, as measured in ROC space, when the training data is imbalanced.

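- Empirical validation with C4.5, Ripper, and a Naive Bayes classifier across several datasets, evaluated by AUC and the ROC convex hull strategy.

- Results show that combining SMOTE with under-sampling of the majority class outperforms under-sampling alone, and also outperforms varying the loss ratios in Ripper or the class priors in Naive Bayes.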

- Recognition of limitations, including the potential risk of overfitting in certain scenarios.

- Discusses strategies for adapting SMOTE to specific use cases (for example, variants for datasets with nominal features), along with its potential drawbacks.

- Significant impact observed in the machine learning community since its introduction in 2002.

- Widely adopted by practitioners and researchers alike.

- Acknowledged as a pioneering technique for addressing class imbalance.

- Influence on subsequent research evident in the development of various methods to handle imbalanced datasets.

- In conclusion, SMOTE is a valuable and widely used tool that has had a substantial impact on how imbalanced datasets are handled in machine learning.
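The interpolation step summarized in the list above can be sketched in plain Python. This is a minimal illustration, not the authors' code: `smote`, `k_nearest`, and `n_percent` are hypothetical names, features are assumed to be continuous, and only over-sampling amounts that are multiples of 100% are supported (200% means two synthetic samples per original minority sample). Neighbors are searched within the minority class only, and one random gap is drawn per synthetic sample so that each new point lies on the line segment between the sample and its chosen neighbor.

```python
import math
import random

def k_nearest(points, i, k):
    """Indices of the k nearest neighbours of points[i] (Euclidean distance), excluding i."""
    dists = sorted((math.dist(points[i], p), j) for j, p in enumerate(points) if j != i)
    return [j for _, j in dists[:k]]

def smote(minority, n_percent=200, k=5, seed=0):
    """Return synthetic minority samples, n_percent // 100 per original sample.

    Hypothetical helper: continuous features only, n_percent a multiple of 100.
    """
    if n_percent < 100 or n_percent % 100 != 0:
        raise ValueError("n_percent must be a positive multiple of 100 in this sketch")
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)  # cannot have more neighbours than other samples
    synthetic = []
    for i, sample in enumerate(minority):
        neighbours = k_nearest(minority, i, k)  # neighbours within the minority class only
        for _ in range(n_percent // 100):
            neighbour = minority[rng.choice(neighbours)]
            gap = rng.random()  # one random gap in [0, 1) per synthetic sample
            # sample + gap * (neighbour - sample): a point on the segment between them
            synthetic.append([a + gap * (b - a) for a, b in zip(sample, neighbour)])
    return synthetic

# Hypothetical 2-D minority class with six samples.
minority = [[1.0, 1.0], [1.2, 0.9], [0.8, 1.1], [1.1, 1.3], [0.9, 0.8], [1.3, 1.2]]
new_samples = smote(minority, n_percent=200, k=5)
print(len(new_samples))  # 12
```

In a full pipeline the synthetic samples would be appended to the training set, optionally after under-sampling the majority class, which is the combination the paper evaluates. The paper also describes how to handle amounts below 100% by over-sampling only a random subset of the minority class; this sketch leaves that case out.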